| Power On |

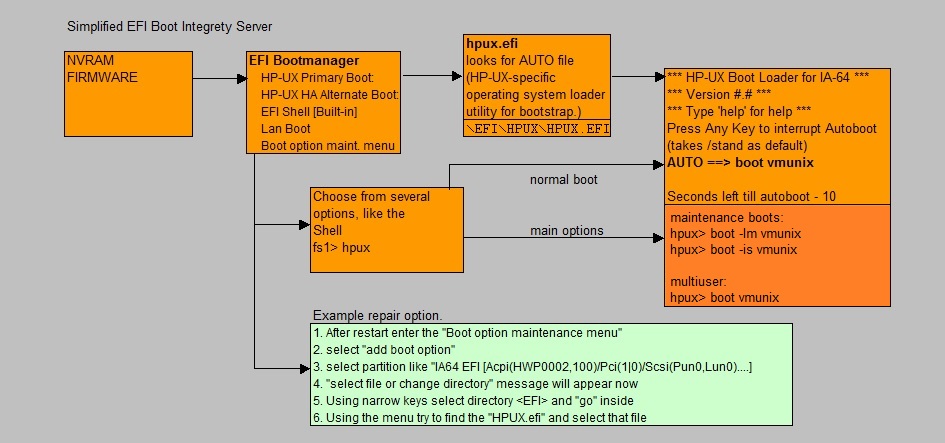

EFI Firmware |

| -> |

EFI System Partition is accessed |

| -> |

EFI Boot manager displays (choose an OS or shell) |

| -> |

If HP-UX is chosen -> [HP-UX Primary Boot, for example 0/1/1/0.1.0 (from NVRAM)] |

| -> |

HPUX.EFI bootstrap loads and reads the AUTO file (typically contains "boot vmunix", which refers to "/stand/vmunix") |

| -> |

HPUX.EFI will then load "/stand/vmunix" (the kernel) |

| -> |

The kernel initializes, loads its modules, and initializes devices/hardware paths |

| -> |

"init" starts. It reads the "/etc/inttab" file. |

| -> |

From "inittab", several specialized commands are started, such as "ioinit" |

| -> |

"init" reads the "run level" (default 3). |

| -> |

The "rc" execution scripts for that run level run: "/sbin/rc" follows the links in "/sbin/rcN.d", which point to the scripts in "/sbin/init.d"
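
The last steps of the flow (reading the run level from "inittab" and ordering the "rc" scripts) can be sketched in Python. This is a minimal illustration, not how "init" or "/sbin/rc" are implemented. It assumes the usual four-field "id:rstate:action:process" inittab layout, and it assumes start links are named with a leading "S" and run in lexicographic order. The function names, the sample inittab text, and the link names are hypothetical.

```python
def default_run_level(inittab_text):
    """Return the run level named by the initdefault entry, or None if absent."""
    for line in inittab_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        # Assumed layout: id:rstate:action:process (process may contain colons)
        fields = line.split(":", 3)
        if len(fields) == 4 and fields[2] == "initdefault":
            return fields[1]
    return None


def start_order(link_names):
    """Return start links (names beginning with 'S') in lexicographic order."""
    return sorted(name for name in link_names if name.startswith("S"))


# Illustrative entries only, not copied from a real system.
SAMPLE_INITTAB = """\
# sample inittab
init:3:initdefault:
ioin::sysinit:/sbin/ioinitrc >/dev/console 2>&1
"""

if __name__ == "__main__":
    print(default_run_level(SAMPLE_INITTAB))
    print(start_order(["S500inetd", "K900nfs.server", "S340net"]))
```

On a real system, the directory listing of "/sbin/rcN.d" would be passed to start_order() in place of the hypothetical link names used here.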